Surviving the Squeeze: A Clinician's and Builder's Guide to HealthTech's Messy Middle

Whether you’re on the clinical front lines or building the technology that supports them, the last few years have felt like a case of whiplash. We rocketed from a period of "growth at all costs", fueled by unprecedented venture capital and promises of total transformation, straight into an era of economic constraint, layoffs, and a relentless focus on the "path to profitability."

For those of us in the trenches, this shift isn't just a headline; it's a tangible change in our daily reality. It’s creating a unique and challenging environment: a "messy middle" caught between the immense pressures of today's economy and the revolutionary promise of tomorrow's technology.

This isn't just a patient-facing issue. It's a professional crisis for the very people meant to be healing and innovating. Today, I want to dissect this awkward gap from both sides of the screen: from the perspective of the clinicians using the tools and of the health tech professionals building them.

The Anatomy of the Awkward Gap

To navigate this period, we first have to understand the two powerful, opposing forces squeezing the industry.

1. The Economic Headwinds (The "Now")

The financial landscape has fundamentally changed. The era of ZIRP (Zero Interest Rate Policy) is over, which means capital is no longer cheap. Venture funding for healthtech has tightened dramatically, and the metrics have shifted from user growth to hard ROI.

For health tech companies, this means the directive from the board is no longer "disrupt," but "survive." The focus is on conserving cash, achieving profitability, and reducing burn rate, which has led to widespread layoffs. These cuts often hit "cost centers" first: the crucial but hard-to-quantify teams in customer success, implementation, and forward-looking R&D.

For hospitals and health systems, the pressure is just as intense. Facing their own razor-thin margins, CFOs have become the primary gatekeepers for any new technology purchase. A tool that doesn't demonstrate a clear, near-term return on investment, either through cost savings or proven efficiency gains, is a non-starter.

2. The AI Horizon (The "Next")

Simultaneously, we're living through an AI revolution that promises to solve healthcare's most intractable problems. The vision is no longer science fiction; it’s a tangible roadmap featuring:

- Ambient Intelligence: AI scribes that passively listen to a clinical encounter and auto-generate a complete, accurate note, order labs, and draft referral letters.

- Predictive Analytics: Algorithms that can identify patients at high risk for sepsis or readmission before they decompensate, allowing for proactive intervention.

- Generative AI & LLMs: Tools that can automate the soul-crushing process of prior authorizations or summarize a 500-page patient record into a concise clinical summary.

Here’s the fundamental problem: The economic cuts are happening today. The AI-powered solutions, while incredibly promising, are not yet seamlessly integrated, universally trusted, or capable of navigating the last-mile complexities of clinical workflow. We are living in the gap between the drawdown of human-powered support and the ramp-up of AI-powered automation.

The View from the Front Lines: A Clinician's Reality

For doctors and nurses (and I'm waving at you too, pharmacists!), this "messy middle" isn't a strategic challenge; it's a daily burden.

You were promised technology that would reduce burnout, but the human support for the systems you already have is thinning out. When your EHR freezes mid-shift or a third-party application fails, the dedicated support specialist you used to call has likely been replaced by a generic ticketing system with a 24-hour response time. You’re forced to become a part-time IT specialist, a role you were never trained for and that takes you away from patients.

This is the downstream effect of health tech’s financial squeeze. The "technical debt" accrued over years of using clunky, non-interoperable EHRs is now compounded by a "support debt," and clinicians are the ones paying the interest with their time and sanity. Many of you have experienced "pilot program purgatory": participating in an exciting trial of a new AI tool that actually saves you time, only to see it shelved after six months due to budget cuts, forcing you back to the old, inefficient workflow.

The View from the Trenches: A Health Tech Professional's Dilemma

For those of you building the products, this period is just as fraught with tension. You entered this field to solve problems for clinicians, yet you’re caught between your users' needs and your company's financial imperatives.

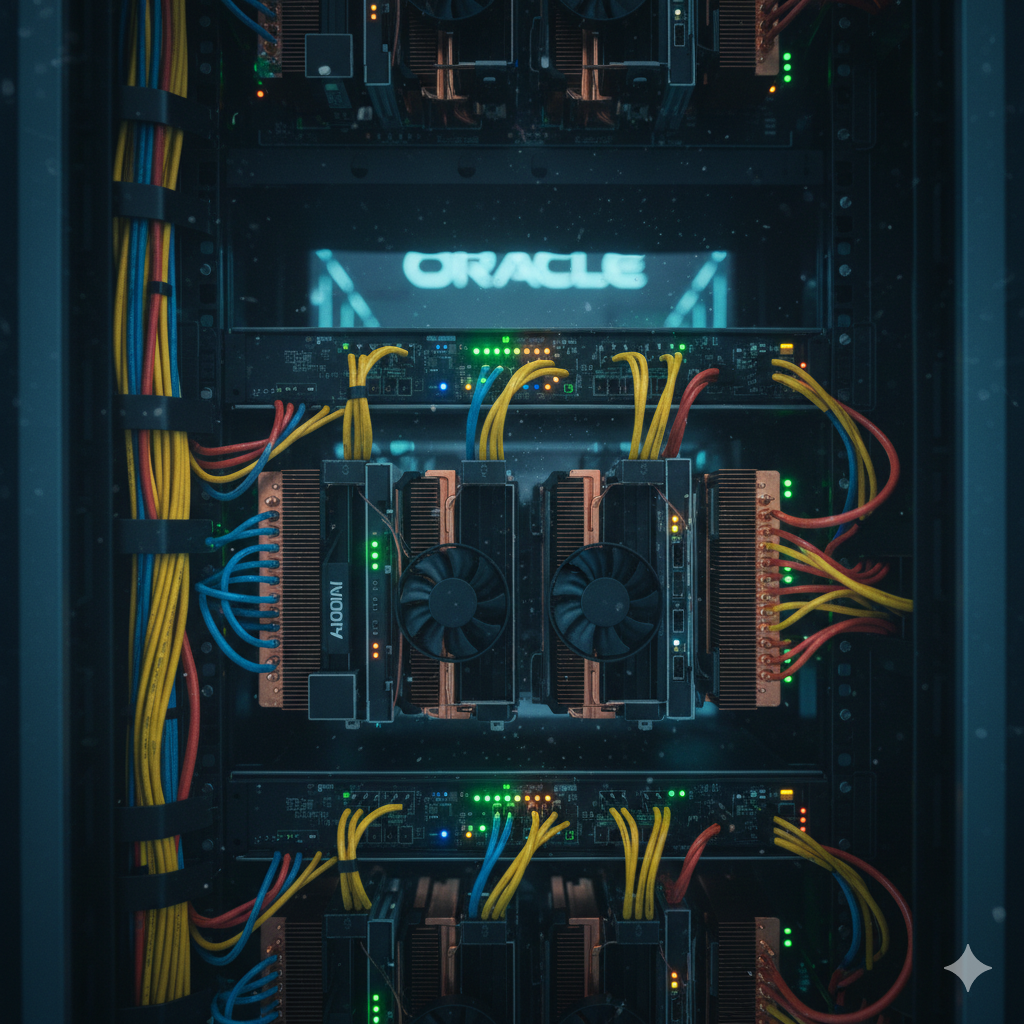

The game has shifted from "disruption" to "integration." A slick UI is no longer enough. To succeed, your product must now flawlessly integrate with the complex, legacy ecosystems of Epic, Oracle/Cerner, and others. This requires deep institutional knowledge and robust engineering resources: the very things that are often downsized in a layoff.

You understand the customer success crisis intimately. You know that in healthcare, a product is only as good as its training, implementation, and adoption. Yet you watch as those very teams are cut, knowing that it will lead to failed deployments and frustrated users down the line.

Most painfully, you're under immense pressure to "show ROI now." The 18-to-24-month sales and implementation cycle that's standard in healthcare is at odds with a board that needs to see positive ROI in 6 to 12 months. This forces difficult product decisions, prioritizing features that look good on a sales deck over foundational improvements that solve deep, systemic workflow problems for your clinical partners.

Dr. Matt's Take: Forging a Path Through the Middle

This "messy middle" is one of the greatest leadership challenges our industry has faced. But it is also a powerful clarifying moment. The hype has evaporated, and only real, durable value will survive. To get to the other side, both clinicians and builders must adapt their approach.

For Clinicians: Your voice has never been more critical. Stop accepting technology that adds to your workload. Become demanding, educated customers. Your detailed feedback on workflow inefficiencies is the most valuable commodity in healthtech. Champion the tools that give you time back, and band together to demand that your administration invest in them. Don't settle for "good enough."

For Health Tech Professionals: This is a flight to quality. The companies that win this next decade will be those that are obsessively focused on solving a specific, painful clinical problem completely. They will treat clinicians as essential design partners, not just end-users. They will over-invest in implementation and support, recognizing that trust is the ultimate currency. Your mission is to be the voice of the user in every meeting, relentlessly advocating for solutions that deliver real, measurable value, not just hype.

The bridge across this gap will be built on a foundation of co-creation and trust. We have a once-in-a-generation opportunity to build a truly intelligent, efficient, and humane healthcare system. But we can only do it together.

#StayCrispy and informed,

-Dr. Matt